让我们考虑一个示例,其中 RAMCloud 存储系统的 Buffer 类经过优化,以使大多数常见操作的速度提高约 2 倍。

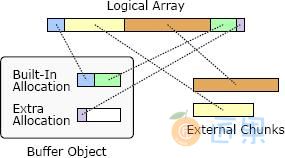

RAMCloud 使用 Buffer 对象管理可变长度的内存数组,例如远程过程调用的请求和响应消息。缓冲区旨在减少内存复制和动态存储分配的开销。缓冲区存储看似线性的字节数组,但是为了提高效率,它允许将底层存储划分为多个不连续的内存块,如图 20.1 所示。通过附加数据块来创建缓冲区。每个块都是外部的或内部的。如果块在外部,则其存储由调用方拥有;缓冲区保留对此存储的引用。外部块通常用于大型块,以避免内存复制。如果内部有块,则 Buffer 拥有该块的存储;调用者提供的数据将被复制到缓冲区的内部存储器中。每个缓冲区包含一个小的内置分配,这是一个内存块,可用于存储内部块。如果此空间已用完,则缓冲区将创建其他分配,销毁缓冲区时必须释放这些分配。内部块对于内存复制成本可忽略不计的小块很方便。图 20.1 显示了具有 5 个块的 Buffer:第一个块是内部的,接下来的两个块是外部的,最后两个块是内部的。

图 20.1:Buffer 对象使用内存块的集合来存储看似线性字节数组。内部块由 Buffer 拥有,并在 Buffer 销毁时释放;外部块不属于缓冲区。

Buffer 类本身代表“根本性的修补程序”,因为它消除了没有它就需要的昂贵的内存副本。例如,在 RAMCloud 存储系统中组装包含短标头和大对象内容的响应消息时,RAMCloud 使用带有两个块的 Buffer。第一个块是包含头的内部块;第二个块是一个外部块,它引用 RAMCloud 存储系统中的对象内容。可以在不复制大对象的情况下将响应收集到缓冲区中。

除了允许不连续块的基本方法外,我们没有尝试在原始实现中优化 Buffer 类的代码。但是,随着时间的流逝,我们注意到缓冲区越来越多地被使用。例如,在每个远程过程调用的执行期间,至少创建四个缓冲区。最终,很明显,加速 Buffer 的实现可能会对整体系统性能产生显着影响。我们决定看看是否可以提高 Buffer 类的性能。

Buffer 最常见的操作是使用内部块为少量新数据分配空间。例如,在为请求和响应消息创建标题时,就会发生这种情况。我们决定将此操作用作优化的关键路径。在最简单的情况下,可以通过扩大 Buffer 中最后存在的块来分配空间。但是,只有在最后一个现有块位于内部,并且其分配中有足够的空间来容纳新数据时,才有可能这样做。理想的代码将执行一次检查以确认简单方法是否可行,然后将调整现有块的大小。

图 20.2 显示了关键路径的原始代码,该代码以 Buffer :: alloc 方法开头。在最快的情况下,Buffer :: alloc 调用 Buffer :: allocateAppend,后者调用 Buffer :: Allocation :: allocateAppend。从性能的角度来看,此代码有两个问题。第一个问题是要单独检查许多特殊情况:

- Buffer::allocateAppend 检查缓冲区当前是否有任何分配。

- 代码检查两次以查看当前分配是否有足够的空间容纳新数据:一次在 Buffer::Allocation::allocateAppend 中,一次在其返回值由 Buffer::allocateAppend 测试时。

- Buffer::alloc 测试 Buffer::allocAppend 的返回值,以再次确认分配成功。

此外,该代码没有尝试直接扩展最后一个块,而是在不考虑最后一个块的情况下分配了新空间。然后,Buffer::alloc 检查该空间是否恰好与最后一块相邻,在这种情况下,它将新空间与现有块合并。这导致其他检查。总体而言,此代码测试关键路径中的 6 种不同条件。

原始代码的第二个问题是它具有太多的层,所有层都很浅。这既是性能问题,也是设计问题。关键路径除了对 Buffer::alloc 的原始调用之外,还进行了另外两个方法调用。每个方法调用花费额外的时间,并且每个调用的结果必须由其调用者检查,这导致需要考虑更多特殊情况。第 7 章讨论了当您从一层传递到另一层时,抽象通常应该如何变化,但是图 20.2 中的所有三种方法都具有相同的签名,并且它们提供了基本相同的抽象。这是一个危险信号。Buffer::allocateAppend 几乎是一个传递方法;它的唯一作用是在需要时创建新的分配。额外的层使代码既慢又复杂。

为了解决这些问题,我们重构了 Buffer 类,使其设计围绕最关键性能的路径进行。我们不仅考虑了上面的分配代码,还考虑了其 他几种常用的执行路径,例如检索当前存储在 Buffer 中的数据的字节总数。对于这些关键路径中的每一个,我们试图确定在通常情况下必须执行的最少代码量。然后,我们围绕这些关键路径设计了课程的其余部分。我们还应用了本书中的设计原则来简化整个类。例如,我们消除了浅层并创建了更深的内部抽象。重构的类比原始版本小 20%(1476 行代码,而原始版本为 1886 行)。

图 20.2:使用内部块在 Buffer 的末尾分配新空间的原始代码。

图 20.3:用于在 Buffer 的内部块中分配新空间的新代码。

图 20.3 显示了用于在 Buffer 中分配内部空间的新关键路径。新代码不仅速度更快,而且更容易阅读,因为它避免了浅层抽象。整个路径使用单一方法处理,并且使用单一测试排除所有特殊情况。新代码引入了新的实例变量 extraAppendBytes,以简化关键路径。此变量跟踪缓冲区中最后一个块之后立即有多少未使用空间可用。如果没有可用空间,或者 Buffer 中的最后一个块不是内部块,或者 Buffer 根本不包含任何块,则 extraAppendBytes 为零。图 20.3 中的代码表示处理这种常见情况的最少代码量。

注意:只要需要,就可以通过重新计算各个块的总缓冲区长度来消除对 totalLength 的更新。但是,这种方法对于具有许多块的大型 Buffer 而言将是昂贵的,并且获取 Buffer 的总长度是另一种常见的操作。因此,我们选择添加少量额外的开销来分配,以确保 Buffer 长度始终立即可用。

新代码的速度约为旧代码的两倍:使用内部存储将 1 字节字符串附加到缓冲区的总时间从 8.8 ns 降低到 4.75 ns。由于修订,许多其他缓冲区操作也加快了速度。例如,构建新缓冲区,在内部存储中附加一小块并销毁缓冲区所需的时间从 24 ns 降至 12 ns。

Let’s consider an example, in which the Buffer class of the RAMCloud storage system was optimized to achieve a speedup of about 2x for the most common operations.

RAMCloud uses Buffer objects to manage variable-length arrays of memory, such as request and response messages for remote procedure calls. Buffers are designed to reduce overheads from memory copying and dynamic storage allocation. A Buffer stores what appears to be a linear array of bytes, but for efficiency it allows the underlying storage to be divided into multiple discontiguous chunks of memory, as shown in Figure 20.1. A Buffer is created by appending chunks of data. Each chunk is either external or internal. If a chunk is external, its storage is owned by the caller; the Buffer keeps a reference to this storage. External chunks are typically used for large chunks in order to avoid memory copies. If a chunk is internal, the Buffer owns the storage for the chunk; data supplied by the caller is copied into the Buffer’s internal storage. Each Buffer contains a small built-in allocation, which is a block of memory available for storing internal chunks. If this space is exhausted, then the Buffer creates additional allocations, which must be freed when the Buffer is destroyed. Internal chunks are convenient for small chunks where the memory copying costs are negligible. Figure 20.1 shows a Buffer with 5 chunks: the first chunk is internal, the next two are external, and the final two chunks are internal.

Figure 20.1: A Buffer object uses a collection of memory chunks to store what appears to be a linear array of bytes. Internal chunks are owned by the Buffer and freed when the Buffer is destroyed; external chunks are not owned by the Buffer.

The Buffer class itself represents a “fundamental fix,” in that it eliminates expensive memory copies that would have been required without it. For example, when assembling a response message containing a short header and the contents of a large object in the RAMCloud storage system, RAMCloud uses a Buffer with two chunks. The first chunk is an internal one that contains the header; the second chunk is an external one that refers to the object contents in the RAMCloud storage system. The response can be collected in the Buffer without copying the large object.

Aside from the fundamental approach of allowing discontiguous chunks, we did not attempt to optimize the code of the Buffer class in the original implementation. Over time, however, we noticed Buffers being used in more and more situations; for example, at least four Buffers are created during the execution of each remote procedure call. Eventually, it became clear that speeding up the implementation of Buffer could have a noticeable impact on overall system performance. We decided to see if we could improve the performance of the Buffer class.

The most common operation for Buffer is to allocate space for a small amount of new data using an internal chunk. This happens, for example, when creating headers for request and response messages. We decided to use this operation as the critical path for optimization. In the simplest possible case, the space can be allocated by enlarging the last existing chunk in the Buffer. However, this is only possible if the last existing chunk is internal, and if there is enough space in its allocation to accommodate the new data. The ideal code would perform a single check to confirm that the simple approach is possible, then it would adjust the size of the existing chunk.

Figure 20.2 shows the original code for the critical path, which starts with the method Buffer::alloc. In the fastest possible case, Buffer::alloc calls Buffer:: allocateAppend, which calls Buffer::Allocation::allocateAppend. From a performance standpoint, this code has two problems. The first problem is that numerous special cases are checked individually:

- Buffer::allocateAppend checks to see if the Buffer currently has any allocations.

- The code checks twice to see if the current allocation has enough room for the new data: once in Buffer::Allocation::allocateAppend, and again when its return value is tested by Buffer::allocateAppend.

- Buffer::alloc tests the return value from Buffer::allocAppend to confirm yet again that the allocation succeeded.

Furthermore, rather than trying to expand the last chunk directly, the code allocates new space without any consideration of the last chunk. Then Buffer::alloc checks to see if that space happens to be adjacent to the last chunk, in which case it merges the new space with the existing chunk. This results in additional checks. Overall, this code tests 6 distinct conditions in the critical path.

The second problem with the original code is that it has too many layers, all of which are shallow. This is both a performance problem and a design problem. The critical path makes two additional method calls in addition to the original invocation of Buffer::alloc. Each method call takes additional time, and the result of each call must be checked by its caller, which results in more special cases to consider. Chapter 7 discussed how abstractions should normally change as you pass from one layer to another, but all three of the methods in Figure 20.2 have identical signatures and they provide essentially the same abstraction; this is a red flag. Buffer::allocateAppend is nearly a pass-though method; its only contribution is to create a new allocation if needed. The extra layers make the code both slower and more complicated.

To fix these problems, we refactored the Buffer class so that its design is centered around the most performance-critical paths. We considered not just the allocation code above but several other commonly executed paths, such as retrieving the total number of bytes of data currently stored in a Buffer. For each of these critical paths, we tried to identify the smallest amount of code that must be executed in the common case. Then we designed the rest of the class around these critical paths. We also applied the design principles from this book to simplify the class in general. For example, we eliminated shallow layers and created deeper internal abstractions. The refactored class is 20% smaller than the original version (1476 lines of code, versus 1886 lines in the original).

Figure 20.2: The original code for allocating new space at the end of a Buffer, using an internal chunk.

Figure 20.3: The new code for allocating new space in an internal chunk of a Buffer.

Figure 20.3 shows the new critical path for allocating internal space in a Buffer. The new code is not only faster, but it is also easier to read, since it avoids shallow abstractions. The entire path is handled in a single method, and it uses a single test to rule out all of the special cases. The new code introduces a new instance variable, extraAppendBytes, in order to simplify the critical path. This variable keeps track of how much unused space is available immediately after the last chunk in the Buffer. If there is no space available, or if the last chunk in the Buffer isn’t an internal chunk, or if the Buffer contains no chunks at all, then extraAppendBytes is zero. The code in Figure 20.3 represents the least possible amount of code to handle this common case.

Note: the update to totalLength could have been eliminated by recomputing the total Buffer length from the individual chunks whenever it is needed. However, this approach would be expensive for a large Buffer with many chunks, and fetching the total Buffer length is another common operation. Thus, we chose to add a small amount of extra overhead to alloc in order to ensure that the Buffer length is always immediately available.

The new code is about twice as fast as the old code: the total time to append a 1-byte string to a Buffer using internal storage dropped from 8.8 ns to 4.75 ns. Many other Buffer operations also speeded up because of the revisions. For example, the time to construct a new Buffer, append a small chunk in internal storage, and destroy the Buffer dropped from 24 ns to 12 ns.